Moving beyond AI model performance

Several years ago, a team at Microsoft developed a tool to help blind children ‘see’ and interact with others. The tool used two machine learning models that each performed very well (>90%). However, when those two models were combined and used by kids, the performance was less than 10%.

The team then realized they had to measure more than just AI model performance; they needed to measure the AI system performance.

AI performance needs to mean more than just model accuracy

It’s easy to forget that only a few years ago we would celebrate if an algorithm could correctly distinguish a picture of a cat from a dog more than 80% of the time. This was a huge achievement, and it was a milestone to be excited about. An AI model is often judged on accuracy, along with the other metrics we’ve discussed, such as sensitivity (recall/TPR), AUC, and precision (PPV). But as AI becomes part of our lives, especially in high-risk fields like healthcare, we need to think of performance more broadly than just a model’s ability to predict or classify.
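As a quick refresher on how these model-level metrics relate to each other, here is a minimal sketch that computes accuracy, sensitivity, and precision from confusion-matrix counts. The function name and the patient counts are made up for illustration; they do not come from any real study.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute accuracy, sensitivity (recall/TPR), and precision (PPV).

    Assumes at least one actual positive and one predicted positive,
    so the denominators are nonzero.
    """
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),
        "precision": tp / (tp + fp),
    }


# Hypothetical counts: 1,000 patients, 50 of whom have the condition.
metrics = classification_metrics(tp=40, fp=60, tn=890, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

With these invented counts, accuracy looks strong while precision is much lower, which is one reason a single headline number can mislead even before system factors enter the picture.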

The complexity in medicine demands metrics that measure the performance of the entire AI system. One can easily imagine a healthcare scenario in which there are multiple AI systems interacting. For example, a cardiology clinic uses one AI model and the ER has another for triage, and the nurses, doctors, and other healthcare professionals interact with each one.

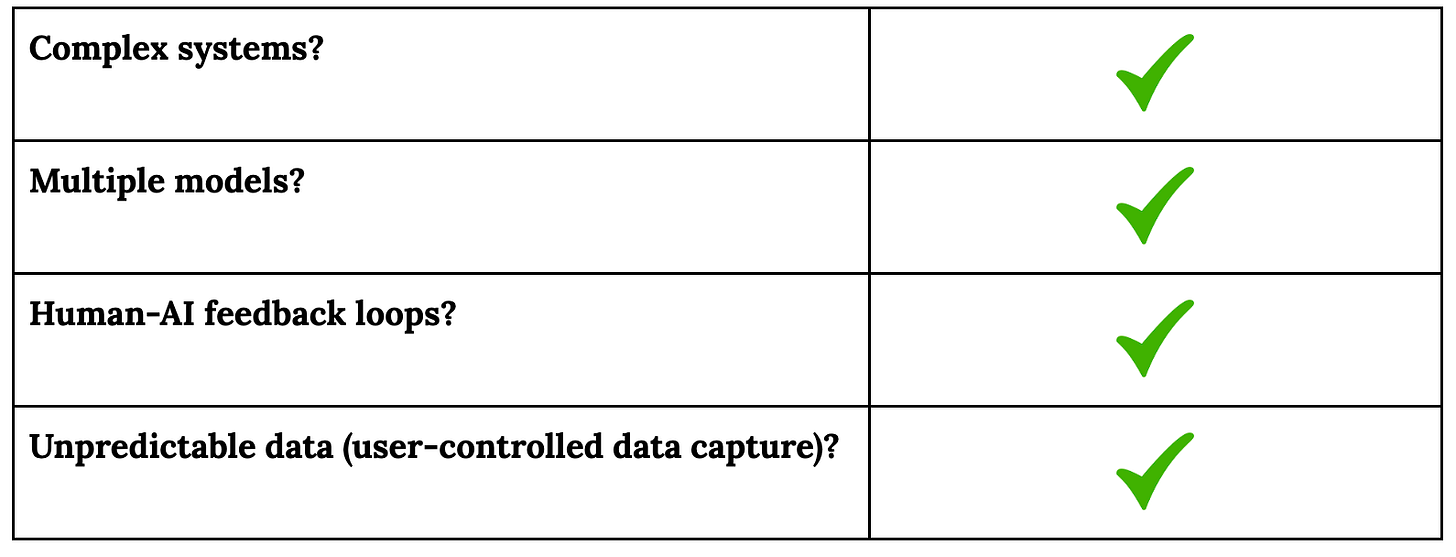

The Microsoft team states: “It is essential for development teams to test on realistic datasets to understand how the AI system generates the actual user experience. This is especially important with complex systems, where multiple models, human-AI feedback loops, or unpredictable data (e.g., user-controlled data capture) can cause the AI system to respond unpredictably.”

Common healthcare scenarios that contribute to AI system performance

System factors will be more impactful than AI model performance

The performance of the model often will not be nearly as important as:

How quickly information from the model can be accessed

How the clinician interprets the model output

How the clinician explains the output to the patient and/or other clinicians

The performance of the clinician-model dyad
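One way to make the clinician-model dyad measurable is to record, for each case, both what the model predicted and what the clinician finally decided after seeing the model output, and then score each against the reference diagnosis. The sketch below is a minimal illustration under that assumption; the `Case` structure and the five cases are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Case:
    truth: bool           # reference diagnosis
    model_flag: bool      # what the model predicted on its own
    final_decision: bool  # what the clinician decided after seeing the model output


def accuracy(pairs):
    """Fraction of (prediction, truth) pairs that agree."""
    pairs = list(pairs)
    return sum(pred == truth for pred, truth in pairs) / len(pairs)


# Invented cases, for illustration only.
cases = [
    Case(truth=True, model_flag=True, final_decision=True),
    Case(truth=True, model_flag=True, final_decision=False),   # clinician overrode a correct flag
    Case(truth=False, model_flag=False, final_decision=False),
    Case(truth=False, model_flag=True, final_decision=False),  # clinician caught a false alarm
    Case(truth=True, model_flag=False, final_decision=True),   # clinician caught a missed case
]

model_acc = accuracy((c.model_flag, c.truth) for c in cases)
dyad_acc = accuracy((c.final_decision, c.truth) for c in cases)
print(f"model alone: {model_acc:.2f}, clinician + model: {dyad_acc:.2f}")
```

The two numbers can move in either direction relative to each other, which is the point: the dyad has its own performance that the model's metrics alone do not reveal.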

Some authors point out that metrics are often proxies for what we really want, and they have inherent limitations. They suggest that organizations using AI:

“• Use a slate of metrics to get a fuller picture

• Conduct external algorithmic audits.

• Combine with qualitative accounts.

• Involve a range of stakeholders, including those who will be most impacted.”

They also note that “some of the most important qualitative information will come from those most impacted by an algorithmic system and includes key stakeholders who are often overlooked.” In a medical setting, this category would include clinicians, hospital/clinic staff, patients, and families, all of whom must have input to make the AI system beneficial and reliable.

One way the Microsoft team solved their tool’s poor performance during ‘typical use’ was to give domain experts (in this case patients and families) a way to provide feedback. This is different from reinforcement learning from human feedback, which tunes the model itself; the feedback here is focused on the system as a whole. Involving physicians, nurses, and other healthcare professionals in the optimization of the AI system will be crucial to maximizing AI’s benefit to patients and families.

The need for standardized AI model information in healthcare

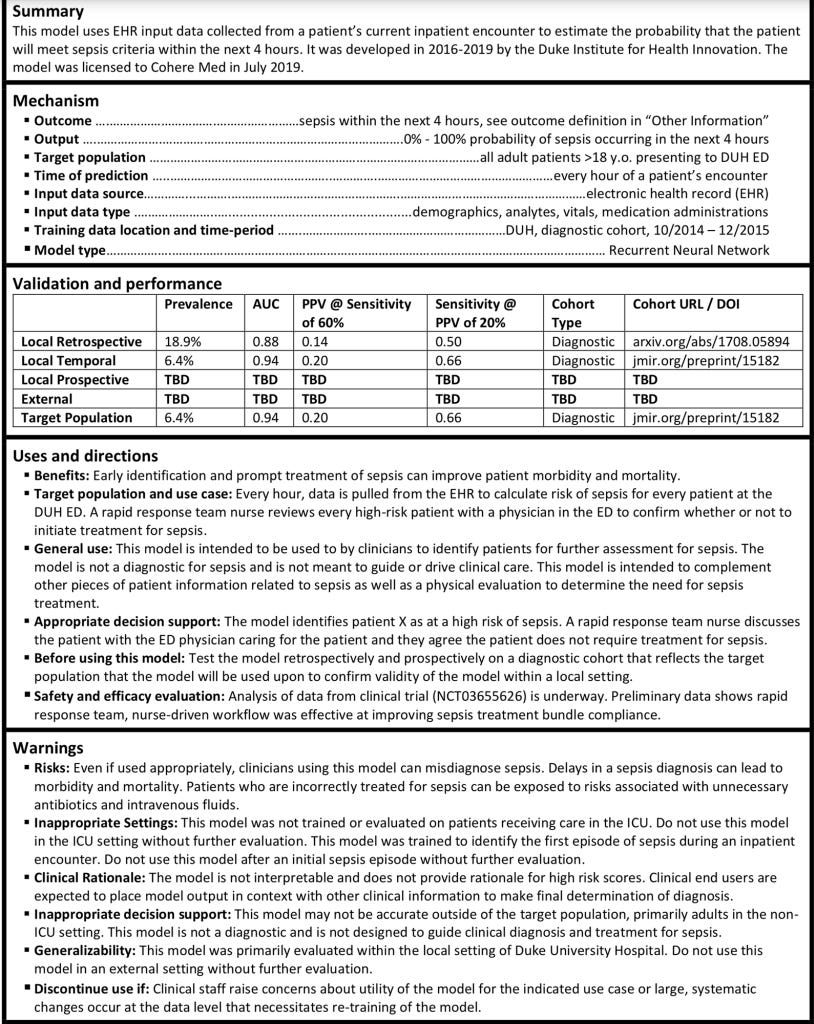

To make the issue of evaluating and optimizing AI performance more challenging, some authors note that “Currently, there are no standardized documentation procedures to communicate the performance characteristics of trained machine learning (ML) and artificial intelligence (AI) models.” In contrast, in more established industries like hardware engineering, customers are often given a data sheet with information about the product including how it performs in different situations.

One group of authors proposes a framework of “Model Cards”, which ideally would be standard information any healthcare practitioner or system would receive prior to purchasing or incorporating an AI model into their system. This concept would decrease the burden on physicians and others reviewing new AI technology. The full card is copied below.

As we saw in last week’s review of performance transparency, companies provide a wide range of AI/model information in disparate locations and in many formats. This makes it exceedingly difficult to compare equivalent information, which in some cases may actually be the point. The weaker companies likely know that their consumers are not yet as sophisticated as they will be in a few years, and think they can get away with providing general assurances that the technology “works”.
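A standard structure is what would make side-by-side comparison possible. As a rough sketch (not the full card, and not any vendor's actual format), a Model Card could be represented as a simple record whose empty sections are easy to spot. The section names loosely follow the Model Cards proposal; the Python field names, the helper function, and the example values are assumptions for illustration.

```python
from dataclasses import dataclass, fields


@dataclass
class ModelCard:
    model_details: str = ""
    intended_use: str = ""
    factors: str = ""
    metrics: str = ""
    evaluation_data: str = ""
    training_data: str = ""
    quantitative_analyses: str = ""
    ethical_considerations: str = ""
    caveats_and_recommendations: str = ""


def missing_sections(card):
    """Return the names of sections the vendor left blank."""
    return [f.name for f in fields(card) if not getattr(card, f.name).strip()]


# Hypothetical, partially completed card.
card = ModelCard(
    model_details="Hypothetical ECG rhythm classifier, v0.1",
    intended_use="Decision support for adult outpatient ECGs",
    metrics="Sensitivity and PPV per rhythm class",
)

gaps = missing_sections(card)
print("Sections still missing:", gaps)
```

A reviewer comparing two products could run the same check on each card and immediately see which vendor has not disclosed, say, its evaluation data.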

Moving toward a standard for AI model and system performance

Ideally, a standardized and thorough description of an AI model would be part of a larger approach to AI performance in which:

An AI system is evaluated during ‘typical use’ in specific settings

System factors are identified that contribute to holistic key performance indicators

An AI system is monitored and user feedback is incorporated to maximize benefit to healthcare workers, patients, and their families
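To illustrate the first item, evaluating during ‘typical use’ in specific settings, here is a minimal sketch that breaks accuracy down by the setting where the AI system was used rather than reporting one overall number. The setting names echo the cardiology clinic and ER example above; the records themselves are invented.

```python
from collections import defaultdict


def accuracy_by_setting(records):
    """records: iterable of (setting, prediction, truth) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for setting, prediction, truth in records:
        total[setting] += 1
        correct[setting] += int(prediction == truth)
    return {setting: correct[setting] / total[setting] for setting in total}


# Invented records, for illustration only.
records = [
    ("cardiology clinic", 1, 1),
    ("cardiology clinic", 0, 0),
    ("cardiology clinic", 1, 1),
    ("cardiology clinic", 0, 0),
    ("ER triage", 1, 0),
    ("ER triage", 0, 0),
    ("ER triage", 1, 1),
    ("ER triage", 0, 1),
]

by_setting = accuracy_by_setting(records)
for setting, acc in by_setting.items():
    print(f"{setting}: {acc:.2f}")
```

In this made-up data the pooled accuracy would hide a large gap between the two settings, which is the kind of system factor that only shows up when results are split by where and how the tool is used.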

To summarize, this month we’ve looked at:

Week 1: Overview of AI Performance

Week 2: Journal articles about AI Performance with a focus on ECG

Week 3: Performance metric transparency of ECG-interpretation companies

Week 4: Looking ahead to the future of AI performance evaluation

What information would you like to have about these products? Where do you see this field evolving?