The AI Governance Satisfaction Gap

What Clinical Work Can Teach Us About Building Satisfying, Effective AI Oversight

The Satisfaction Gap: From Checkbox Governance to Meaningful AI Evaluation

In clinical care, there’s a particular kind of satisfaction that comes from moving through a sequence of steps where the outcome is clear and the impact is immediate. A child presents with arm pain. An X-ray is ordered. A hairline fracture is identified. A cast is placed. The room exhales. There’s a sense that something real has happened—problem identified, solution implemented, outcome verified. A meaningful task completed with purpose.

My son’s recent broken arm. Pro tip: Do not body slam your older brother. That said, his care team seemed very satisfied taking care of him!

Now contrast that with the world of healthcare AI governance. Here, the checkboxes multiply: multiple-choice attestations, risk scoring rubrics, bias mitigation plans, reproducibility forms. Hours may go into completing these frameworks, yet it’s often unclear if they meaningfully reduce harm or improve patient outcomes. The work can feel disconnected from the people it’s meant to help.

This is what I call the “satisfaction gap” of AI governance—the psychological distance between the work performed and the confidence that it matters. In clinical care, each step ladders up to a tangible result. In governance, we often operate in the abstract, hoping the scaffolding we build will hold under pressure but rarely seeing the results firsthand.

And yet both are necessary. One heals a broken bone. The other, ideally, prevents a broken system. The challenge is making governance feel less like bureaucracy and more like care.

The Anatomy of Satisfaction

What makes clinical tasks satisfying? A major driver is providing excellent care to patients, according to an AMA study on burnout. We know from other burnout literature that perceived impact, visible results, and meaningful work all help prevent burnout. There’s also a dopamine release from clear impact, and even some oxytocin from connecting with and helping our patients. Humans like to clearly help other people.

Current approaches to healthcare AI governance seem almost perfectly designed to eliminate these satisfaction triggers. Multiple-choice evaluations with arbitrary scoring thresholds feel disconnected from real life. Documentation requirements with no clear connection to improved safety feel like clerical tasks, which echoes the 29% increase in burnout associated with the introduction of computerized order entry.

"I spent six hours evaluating an AI tool last month," a colleague recently told me. "I have absolutely no idea if my input changed anything or improved patient care in any way. It's worse than insurance paperwork—at least with insurance, you eventually get an approval or denial."

This disconnection creates more than frustration; it breeds cynicism about the entire enterprise of AI governance. If we can't see how our AI evaluations improve outcomes, why invest precious time and energy?

The EHR Cautionary Tale

We've been here before. Two decades ago, electronic health records promised transformed care through data aggregation and analysis. Today, EHRs are consistently cited among the top contributors to physician burnout.

What happened? The connection between daily documentation work and its supposed benefits became too abstract. Data entry was optimized for billing rather than clinical workflow. The goal of improving patient care got lost in the day-to-day tasks.

AI governance risks the same fate if we don't learn this lesson: processes disconnected from visible outcomes will always feel burdensome rather than meaningful.

The Necessary but Uncomfortable Journey to Competence

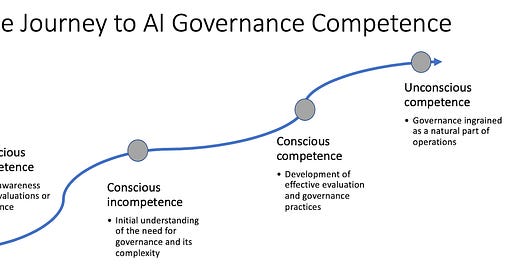

Yet here's our dilemma: we cannot skip this phase. We're in the middle of the Journey to Competence, which is that uncomfortable middle space between recognizing a need (effective AI evaluation and governance) and developing mature systems to address it.

Right now, we’re at Conscious Incompetence and moving toward Conscious Competence: we know we need to develop better approaches to governance and are in the early stages of figuring out what that looks like.

From a practical standpoint, we can manage multiple-choice assessments, basic bias evaluations, vendor self-attestations, and (maybe) rudimentary outcome measures. That feels far from where we want to be: governance that directly and visibly improves patient outcomes. In between lies essential but potentially unsatisfying work, like establishing baselines, building evaluation infrastructure, and developing institutional knowledge. The fact that this process exists in every field should give us the vision and fortitude to continue toward a more meaningful place.

Bridging the Gap

How do we make AI governance more satisfying while we navigate this necessary middle phase?

First, create clear lines of sight between AI evaluation and clinical outcomes. Often the end users in a system are ‘voluntold’ that they need to test new software, then struggle to make sense of it, then fill out a questionnaire. Sometimes they see that product again, sometimes they don’t. If clinicians spend hours evaluating an AI tool, we owe them a summary of exactly how their input shaped the final product. We have to make the connection between governance activities and patient benefits explicit and tangible.

Second, build rapid feedback loops into governance processes. Rather than year-long evaluation cycles, implement shorter, iterative assessments with visible impacts. And when clinicians offer feedback or suggest improvements, we owe them a response, even if the change isn’t possible. If clinicians see the value of their input, they’ll be partners in improving the product and system. This mimics the satisfaction cycle of clinical medicine: action, connection, and visible results.

Embracing the Uncomfortable Middle

Frustration with AI governance doesn't mean it’s not worthwhile; it simply means we're in the middle of the path. Like learning any complex skill, the beginning is awkward, frustrating, and often unsatisfying.

Our task is not to eliminate this gap, but to design governance approaches that acknowledge basic human psychology. We need evaluation processes that connect visibly to outcomes, provide meaningful feedback, and support our professional identity.

Only then will AI governance checkboxes begin to feel more like that X-ray order—a satisfying step in a process clearly connected to better patient care, rather than bureaucratic busywork disconnected from our purpose as physicians. The satisfaction isn't just a "nice to have"—it's the fuel that will sustain the difficult work of responsible innovation.

We have to remember that the “test with end users” box at the end of every AI governance slide involves real people with real needs for satisfying and meaningful impact. Let’s make sure AI governance doesn’t feel like just another checkbox.