Healthcare AI Risk: The NIST AI Risk Framework

Physician-friendly AI concepts from Machine Learning for MDs

In January 2023, the National Institute of Standards and Technology (NIST) published the AI Risk Management Framework (AI RMF) 1.0, and in March it launched the related Trustworthy and Responsible AI Resource Center to provide education about and evaluation of the Framework. The Framework is completely voluntary and is meant to serve as

“a resource to the organizations designing, developing, deploying, or using AI systems to help manage the many risks of AI”.

High-level Summary

For me, the key takeaways from this document are:

This is version 1.0 of the AI Risk Management Framework. It’s all new, and it will surely evolve.

There are inherent tensions in AI risk mitigation that must be understood to best serve our patients.

Developing a robust strategy for AI risk mitigation is a huge, organization-wide, multidisciplinary endeavor.

AI risks are especially challenging because:

Benchmarks don’t yet exist, or are specific to communities or institutions

Human performance benchmarks may not have been captured

National benchmarks for AI risk haven’t been established

Real-world risk will differ from risk measured during testing, likely more so than with traditional software

Deep learning is inherently opaque (a “black box”), and its results can be hard to reproduce

NIST also notes that AI risks differ from traditional software risks:

The increased complexity and data requirements of AI systems can lead to more errors and privacy loss that are also more difficult to detect.

Increased opacity and concerns about reproducibility.

Underdeveloped software testing standards and inability to document AI-based practices to the standard expected of traditionally engineered software for all but the simplest of cases.

The NIST Risk Management Framework Summary

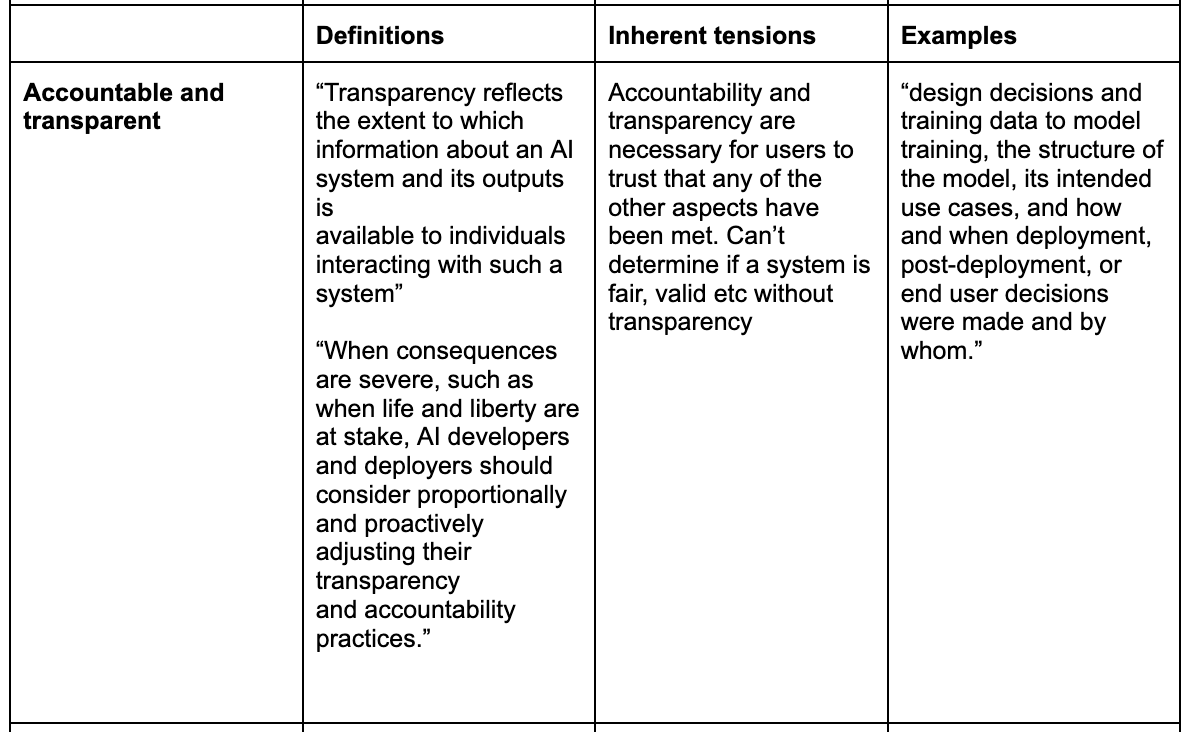

The NIST document gives a framework for AI risk that I’ve summarized in the table below, including the definitions, inherent tensions, and a healthcare example for each element. The inherent tensions are especially important to consider because they demonstrate that no AI model or system can be zero risk; there’s always a tradeoff. Physicians are especially well-placed to understand and weigh these competing interests, and to participate in the resulting risk decisions.

NIST then refers users to a companion Playbook, which is organized around four functions: Govern, Map, Measure, and Manage. It’s long and detailed. For example, there are 19 sections under “Govern”. One of them is “Mechanisms are in place to inventory AI systems and are resourced according to organizational risk priorities”; the Playbook explains what that statement means, then lists suggested actions and suggested documentation. Most of the suggested actions involve establishing organizational policies or approaches. With more than 70 sections in total, the Playbook is very comprehensive.
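The inventory item quoted above is an organizational policy rather than software, but a small sketch can make the idea concrete. This is a minimal illustration under stated assumptions, not anything NIST prescribes: it assumes a hypothetical three-tier risk scale that an organization defines for itself (1 = highest risk), and the names `AISystem`, `review_order`, and `allocate_hours`, the example systems, and the proportional weighting of review time are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AISystem:
    """One entry in a hypothetical organizational AI inventory."""
    name: str
    clinical_use: str
    risk_priority: int  # hypothetical scale: 1 = highest risk, 3 = lowest

    def __post_init__(self) -> None:
        # Reject tiers outside the assumed 1-3 scale.
        if self.risk_priority not in (1, 2, 3):
            raise ValueError(f"risk_priority must be 1, 2, or 3, got {self.risk_priority}")


def review_order(inventory: list[AISystem]) -> list[str]:
    """Return system names with the highest-risk systems first (ties broken by name)."""
    ranked = sorted(inventory, key=lambda s: (s.risk_priority, s.name))
    return [s.name for s in ranked]


def allocate_hours(inventory: list[AISystem], total_hours: float) -> dict[str, float]:
    """Split review time in proportion to risk (assumed weights: tier 1 -> 3, tier 2 -> 2, tier 3 -> 1)."""
    weights = {s.name: 4 - s.risk_priority for s in inventory}
    weight_sum = sum(weights.values())
    return {name: total_hours * w / weight_sum for name, w in weights.items()}


if __name__ == "__main__":
    inventory = [
        AISystem("scheduling-forecast", "predicts clinic no-shows", 3),
        AISystem("sepsis-alert", "inpatient sepsis early warning", 1),
        AISystem("note-summarizer", "drafts visit summaries for physician review", 2),
    ]
    print(review_order(inventory))
    print(allocate_hours(inventory, 60))
```

With the three example systems and 60 review hours, the sepsis alert is reviewed first and receives 30 hours, the note summarizer 20, and the scheduling forecast 10. A real inventory would carry far more fields (owner, data sources, validation status), and how risk tiers are assigned is exactly the kind of decision the Govern function asks organizations to make deliberately.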

Summary

Last week, we discussed general AI risks that all businesses share. This week, we looked at how the government frames these risks and suggests mitigating them. Next week, we’ll look at how the FDA frames SaaS vs SaMD and the clinical risk.