Clinical AI Risk: Current and Future State

How to think about Clinical AI Risk and what every physician should ask for before using AI clinically

This month’s Substack posts have looked at different aspects of AI Risk.

Now we’ll use that knowledge to discuss Clinical AI Risk:

Why it’s hard to measure clinical risk

How AI introduces clinical risk

Risks of NOT using AI

The Clinician AI Tool Scorecard

Why is it so hard to measure clinical risk?

First, let’s imagine an ideal system for determining the clinical risk posed by an AI product that’s introduced to your clinic or hospital. You’d probably want to know:

What kinds of risks should I be aware of for this patient when I use AI?

What’s the worst case scenario with the AI tool in this situation?

That seems pretty straightforward. So why is it so hard to get answers to these questions?

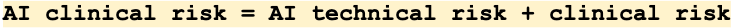

Part of the answer is that clinical risk assessment for AI includes both “normal” AI risk and “normal” clinical risk.

The likelihood and severity of each of those risks may also differ widely: a risk may be very likely but not severe, or vice versa.
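To make the likelihood-versus-severity distinction concrete, here is a minimal sketch of a simple risk matrix. The 1 to 5 scales, the thresholds, and the two example risks are all assumptions chosen for illustration; they do not come from any standard or study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    """One risk rated on two illustrative 1-5 ordinal scales (assumed, not standard)."""
    name: str
    likelihood: int  # 1 = rare, 5 = almost certain
    severity: int    # 1 = negligible, 5 = catastrophic

    def score(self) -> int:
        """Simple risk-matrix score: likelihood times severity."""
        return self.likelihood * self.severity


def describe(risk: Risk) -> str:
    """Label a risk by which dimension dominates (thresholds are arbitrary)."""
    if risk.likelihood >= 4 and risk.severity <= 2:
        return "likely but not severe"
    if risk.likelihood <= 2 and risk.severity >= 4:
        return "unlikely but severe"
    return "mixed"


# Hypothetical examples, for illustration only.
draft_typo = Risk("minor error in a draft note", likelihood=5, severity=1)
missed_finding = Risk("missed critical finding", likelihood=1, severity=5)

for r in (draft_typo, missed_finding):
    print(f"{r.name}: score={r.score()}, {describe(r)}")
```

Both example risks get the same combined score, which is why a single number can hide the difference between a frequent nuisance and a rare catastrophe. Keeping likelihood and severity visible separately preserves that difference.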

How AI clinical risk is introduced

Let’s think about the main ways risk is introduced with an AI tool, each of which also has an associated severity and likelihood:

Wrong information

Wrong workflow

Wrong use

Wrong information

These are mostly covered by the NIST framework.

Wrong workflow

These are similar to the Five Rights of Medication Administration.

Wrong use

These are similar to the use errors identified with EHRs.

Risks of not using AI

Crucially, there are also risks of not using AI.

Note: It’s important to understand the baseline of medical error that we’ve been willing to accept. The estimates are very high and change often, but the point stands: being cared for by humans carries inherent risks. Humans are often not rational, don’t communicate essential information as well as they think they do, give incorrect information, and aren’t consistent. Yet we’ve been willing to accept these limitations on what we can do for patients *because there was no good alternative*. It’s essential to remember that AI with zero risk doesn’t exist, just as humans who make zero mistakes don’t exist.

It’s helpful to think about the risk of not using AI in the context of areas of possible harms, each of which has an associated severity and likelihood:

Harms to patients

Harms to systems

Harms to users

What would be helpful for clinicians using AI tools

We want a system that integrates, for a specific patient, the likelihood and severity of each possible harm across the different risks.

Getting back to the first two questions that physicians want answered:

What kinds of risks should I be aware of for this patient when I use AI?

What’s the worst case scenario with the AI tool in this situation?

Ideally, we’d have a quantitative clinical AI risk system that would include the possible harm of using AND not using AI in a specific setting.
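As a rough sketch of what such a comparison could look like, the snippet below computes an expected harm (probability times severity, summed) for using and for not using an AI tool in one setting. Every probability, severity weight, and harm description here is a made-up placeholder; no such validated numbers exist in this post, and a real system would need them from well-designed studies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Harm:
    description: str
    probability: float  # assumed chance of the harm per encounter, 0 to 1
    severity: float     # assumed harm weight on an arbitrary 0-10 scale


def expected_harm(harms: list[Harm]) -> float:
    """Sum of probability times severity over all listed harms."""
    return sum(h.probability * h.severity for h in harms)


def lower_risk_option(with_ai: list[Harm], without_ai: list[Harm]) -> str:
    """Return which option has the lower expected harm under these assumptions."""
    a, b = expected_harm(with_ai), expected_harm(without_ai)
    if a < b:
        return "using AI"
    if b < a:
        return "not using AI"
    return "no difference"


# Placeholder values for illustration only; not real clinical data.
with_ai = [
    Harm("wrong information from the tool", probability=0.02, severity=6.0),
    Harm("wrong use of the tool", probability=0.01, severity=4.0),
]
without_ai = [
    Harm("delayed diagnosis without the tool", probability=0.03, severity=7.0),
]

print(lower_risk_option(with_ai, without_ai))
```

The design point is only that both sides of the ledger get the same treatment: harms of use and harms of non-use are listed, weighted, and compared in the same units.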

In the future, I hope ML models can calculate this risk for us, with the help of well-designed studies that quantify, for example, the gap between real-world and ideal use, or the likelihood of a wrong diagnosis by the AI and by the clinician for a given patient.

Until then, we know that the highest risk arises when there is no clinician in the loop who understands how the tool functions. When there’s no physician in the workflow for a patient’s care, there is no one to steer the ship and make sure the model and system are functioning as they should. When these tools are presented as substitutes for clinical judgment rather than additions to it, the risk is much higher. Any system that doesn’t include clinicians will be high-risk by definition.

Understanding the strengths and weaknesses of AI is a learned skill, and it will take years for all clinicians to become comfortable with it. Physicians are trained in, and used to, reading drug inserts and journal articles, but this technology is sufficiently new and complex to warrant a new approach.

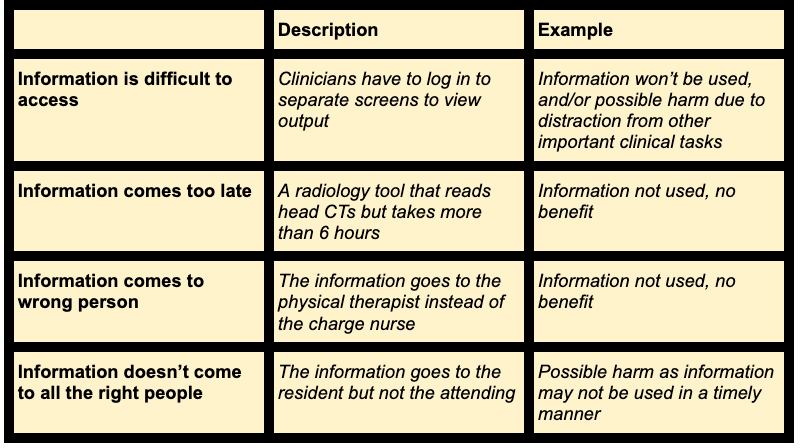

What I hope evolves is a clinical version of the model scorecard: the Clinician AI Tool Scorecard. This would be a short document including the factors below, plus additional information depending on the specialty and use:

Clinicians need an easy way to assess the AI tool and integrate its capabilities into their clinical knowledge and judgment. Physicians have used these kinds of summaries for years in the form of medication inserts, handbooks, pocket guides, and sites like UpToDate. Any AI tool with clinical impact should be required to provide this information to clinicians prior to use, in an easy-to-understand format that (ideally) reflects that physician’s practice setting.

This Clinician AI Tool Scorecard would integrate the risks identified by NIST, the FDA, and others to provide actionable, concise information to clinicians. Someday, the scorecards could include quantitative risk data and be personalized to the patient. I’m hopeful that the physician community will advocate for access to this crucial information.
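One way to picture the scorecard is as a small structured record. The sketch below is hypothetical: the field names and section lists are my assumptions, built from the risk categories and harm areas discussed in this post, and are not an established format from NIST, the FDA, or any vendor.

```python
from dataclasses import dataclass, field

# Assumed section names, borrowed from the categories used earlier in this post.
USE_RISK_CATEGORIES = ("wrong information", "wrong workflow", "wrong use")
NON_USE_HARM_AREAS = ("patients", "systems", "users")


@dataclass
class ClinicianAIToolScorecard:
    """Hypothetical scorecard layout; every field name is illustrative."""
    tool_name: str
    intended_use: str
    practice_setting: str
    clinician_in_loop: bool
    risks_of_use: dict[str, str] = field(default_factory=dict)
    risks_of_not_using: dict[str, str] = field(default_factory=dict)

    def missing_sections(self) -> list[str]:
        """List the sections the tool's maker has not filled in yet."""
        missing = [c for c in USE_RISK_CATEGORIES if c not in self.risks_of_use]
        missing += [
            f"harms to {area}"
            for area in NON_USE_HARM_AREAS
            if area not in self.risks_of_not_using
        ]
        return missing

    def high_risk_by_definition(self) -> bool:
        """Per the argument above: no clinician in the loop means high risk."""
        return not self.clinician_in_loop


# A made-up tool, for illustration only.
card = ClinicianAIToolScorecard(
    tool_name="ExampleTool",
    intended_use="drafting visit summaries",
    practice_setting="outpatient primary care",
    clinician_in_loop=True,
    risks_of_use={"wrong information": "may omit or invent details in the draft"},
    risks_of_not_using={"users": "more after-hours documentation time"},
)

print(card.missing_sections())
print(card.high_risk_by_definition())
```

A structure like this makes gaps visible: a clinician can see at a glance which risk sections are still blank before deciding whether to use the tool.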

Are you a physician? Join the ML for MDs Slack group, where we share information and resources about the cutting edge of AI/ML in healthcare.