Can We Streamline the AI Governance Homework Packets?

A new, free crosswalk of NIST, CHAI, and RAISE

AI Governance Isn’t an Academic Exercise—So Why Do the Tools Feel Like Homework?

Because we’re the kind of irritating parents who assign homework, my kids do an hour of it every day, whether or not school is in session. This summer they’re doing the usual work plus French as we prepare for two months in France. That means I get to watch four humans struggle through various forms of homework for at least four cumulative hours a day.

Yesterday’s highlights included:

A discussion about 1770s marriage customs and their relationship to Hamilton and the Constitution

Multiple arguments over Spanish and French verb conjugations

Me tying a string to a stick to make a bow and arrow for reasons I still don’t fully understand

Watching them, I realized something: the facial expressions my kids make while trudging through busywork are exactly the same as the ones I see on the faces of healthcare AI vendors filling out AI governance questionnaires.

That combination of white-knuckled determination and quiet despair seemed a little too familiar.

But I also know that learning doesn’t have to be so painful. Kids naturally love learning. (I know this because, in 120-degree August heat in Egypt, mine insisted on visiting one minor temple after another and were horrified when my husband and I suggested heading back to the air conditioning.) But the learning has to be connected to their world, not just theoretical.

Valley of the Kings in southern Egypt

The Homework Problem in AI Governance

Right now, vendors are cobbling together responses to governance frameworks like CHAI, RAISE 3, and the NIST AI Risk Management Framework. They’re trying to figure out what they should be doing, how to document it, and which pages of which PDF will make them look responsible without committing to anything they’ll regret later.

To be fair, I actually like reading these frameworks. (Yes, I’m that person.) But I can admit: they are long and exhausting, and not very decision-oriented.

And just like with my kids’ homework, I find myself wishing we could spend more time in actual learning mode: less “this is the theory” and more “this is the practice, and here’s why it matters.”

In between checking my children’s homework, I read all three, so you don’t have to.

And I built a crosswalk so you can see what they agree on, where they diverge, and what none of them address at all.

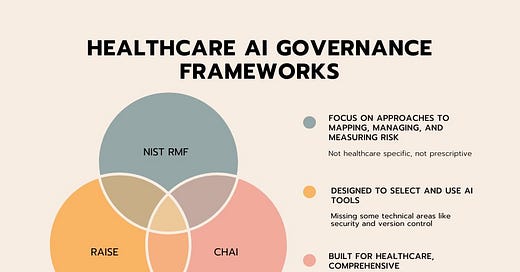

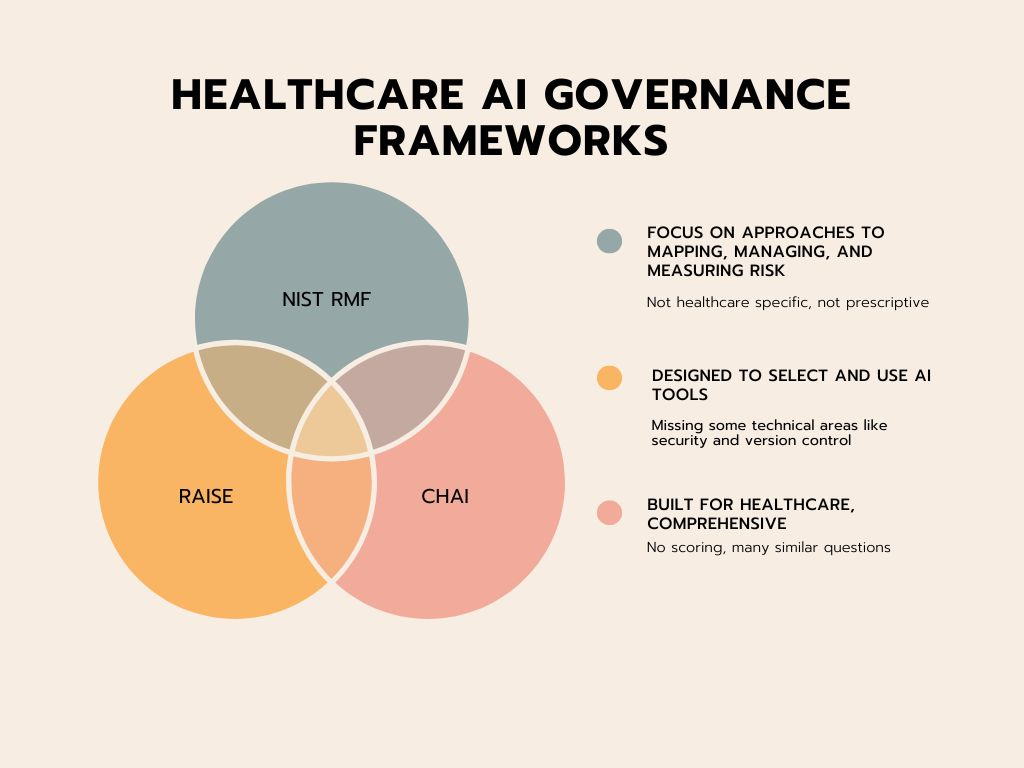

A Quick Tour of the Big Three

1. NIST AI Risk Management Framework (RMF)

Strengths:

Highly structured, risk-focused

Emphasizes lifecycle governance

Strong on mapping, measuring, and managing risk

Drawbacks:

Not healthcare-specific

Written for internal enterprise risk teams, not clinical buyers

No alignment with workflows or clinical edge cases

Comprehensive but not prescriptive

Where it aligns: System inventory, bias mitigation, incident response planning

2. CHAI Responsible AI Guide

Strengths:

Built for healthcare

Explicit inclusion of clinical relevance and lifecycle thinking

Drawbacks:

Hundreds of questions—overwhelming for most vendors

No scoring, prioritization, or real differentiation

Where it aligns: Clinical risk, fairness, independent validation, patient safety

3. RAISE 3

Strengths:

Clear, pragmatic questions

Focus on “selecting and using AI synthesis tools”

Drawbacks:

No scoring or risk thresholds

Misses key areas like security, version control, and lifecycle management

Where it aligns: Usability, human oversight, intended-use clarity

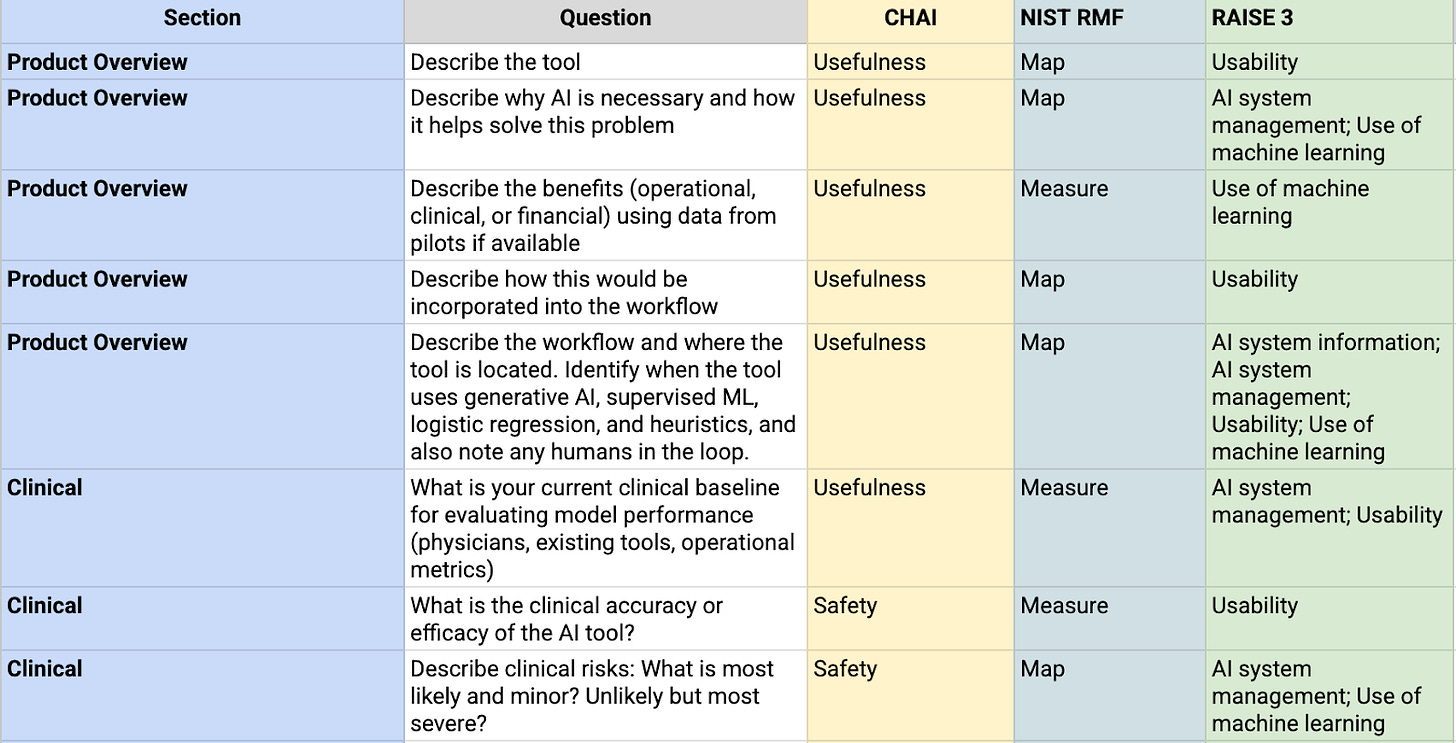

Framework Comparison at a Glance

New Crosswalk of AI Governance Frameworks

I built a crosswalk of 40+ questions that maps the three frameworks onto one another. It’s free to use with attribution under the CC BY 4.0 license. Preview below:

What They Agree On—and What They All Miss

Across all three frameworks, there’s agreement on core governance principles:

Transparency

Human oversight

Fairness

Documentation

But none of them give us a way to:

Score or prioritize risks

Address real clinical failure modes (like alert fatigue or overreliance)

Connect model performance to workflow impact

From Homework to Real Learning

Like my kids, who slog through grammar drills while still wondering how to order a croissant in Paris, vendors are wading through multiple governance frameworks without a clear sense of what any of it actually means for building safer, more usable AI.

But it doesn’t have to be this way.

Governance shouldn’t feel like an obligation you grind through to get the grade. Done right, it should be the thing that helps you learn, improve, and build trust—because it’s connected to real-world outcomes, not just checkboxes. That’s why I built this crosswalk: not to add more homework, but to streamline it and identify the common core of what really matters.

And if you’re still stuck conjugating verbs, whether in French, English, or governance documents, feel free to get in touch.