AI Transparency: How many definitions is enough?

The ONC's (b)(11) rule on Decision Support Intervention transparency

AI transparency can mean anything from describing how an AI system arrives at its conclusions (chain of thought or similar), to describing the training data in detail, to letting users know when AI was used in an interaction. This is a huge umbrella, and it is yet another way that two AI experts can easily think they’re agreeing on the same thing while actually discussing completely different topics.

Transparency is usually defined as making information about the AI system openly available, but it is sometimes conflated with AI explainability and observability. Explainability is understanding how an AI system makes specific decisions, and can be thought of as either intrinsic (for example, if it uses math we know how to explain, like linear regression) or post-hoc (if it uses math that is hard for us to explain ahead of time, like most powerful LLMs). Observability is monitoring the behavior of AI systems in real time or post-deployment, especially to detect drift, failures, or unintended consequences; it is more about picking up whether the AI system is doing something unexpected.

Transparency was all the rage for a while but seems to have died down recently, perhaps as we collectively realize that many of the tools we use will be built on proprietary software that won’t reveal all its secrets, or because the focus has shifted toward outcomes rather than broadly hoping to peek inside an LLM.

I’ve made a table of the different types of transparency below:

The ONC has stepped into the ring of AI transparency with new Decision Support Interventions (DSI) certification criteria that aim to make healthcare AI more transparent. Let’s get into the details.

Who Does This Rule Apply To? (Spoiler: Not Everyone)

These requirements have a surprisingly narrow scope:

“Primarily applies to: Certified EHR vendors who develop, integrate, or supply predictive AI tools as part of their certified Health IT Modules.

Does not directly apply to:

Standalone AI tools developed by third-party companies (unless formally "supplied by" an EHR vendor)

Health system-developed algorithms

AI applications used alongside but not integrated into EHRs”

This creates what one industry briefing called a "transparency gradient" across the AI ecosystem. The critical phrase here is "supplied by" – when an EHR vendor takes stewardship of a predictive DSI (whether they built it themselves or integrated someone else's tool), they theoretically become responsible for providing all the required transparency information.
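To make the scope concrete, here is a minimal Python sketch of that decision logic. It is my simplification of the summary above, not the regulatory text: the `Tool` class, its two flags, and the example tools are all hypothetical, and real applicability depends on the certification details.

```python
from dataclasses import dataclass


@dataclass
class Tool:
    """Hypothetical description of a decision support tool."""
    name: str
    is_predictive: bool                     # assumption: meets the ONC's definition of predictive DSI
    supplied_by_certified_ehr_vendor: bool  # assumption: the vendor has taken stewardship


def predictive_dsi_requirements_apply(tool: Tool) -> bool:
    """Simplified reading: both conditions must hold for the requirements to apply."""
    return tool.is_predictive and tool.supplied_by_certified_ehr_vendor


tools = [
    Tool("vendor-built sepsis model", True, True),
    Tool("third-party model, not supplied by the vendor", True, False),
    Tool("health system's homegrown algorithm", True, False),
    Tool("BMI calculator", False, True),
]

in_scope = [t.name for t in tools if predictive_dsi_requirements_apply(t)]
print(in_scope)
```

Only the first tool lands in scope, which is the "transparency gradient" in miniature: the same kind of model can carry very different disclosure obligations depending on who supplies it.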

If you’re wondering what qualifies as predictive AI, here’s a helpful table from the ONC, in case anyone thought BMI calculations were suddenly AI-based decision support interventions.

This Is About Transparency, Not Efficacy

The ONC isn't trying to determine quality. Instead, they're creating a standardized transparency framework that helps users answer questions like:

Was this sepsis prediction model validated in populations similar to ours?

Does this risk calculator perform equitably across demographic groups?

How frequently is this algorithm retrained on current data?

As the regulation states, the requirements are "intended to provide users and the public greater information on whether a Predictive DSI is fair, appropriate, valid, effective, and safe" (the FAVES quality framework, which the ONC uses for all their AI evaluations).

The Technical Reality Gap: Where the Requirements Fall Short

There are some fundamental technical issues with this rule. The regulation was designed with relatively straightforward, single-model systems in mind. But modern healthcare AI is often far more complex:

1. Multi-Model Systems and Agent Architectures

Modern AI increasingly uses multiple models working together or agent-based architectures that dynamically invoke different algorithms. Think about:

LLM-based clinical documentation tools that might use one model for transcription, another for structuring data, and a third for suggesting clinical codes. Which model's "validity" should be reported?

Agent-based clinical pathways that dynamically invoke different algorithms based on changing patient data. These don't fit neatly into the static disclosure framework the ONC envisions.
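One way to see the problem is to write the pipeline down. Below is a rough Python sketch with made-up stage names and fields (nothing here comes from the ONC's attribute list) that keeps a separate validation note per model and flags the stages with nothing to report.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Stage:
    """One model in a hypothetical clinical documentation pipeline."""
    task: str
    model_name: str
    validation_summary: Optional[str] = None  # None means nothing to disclose yet


# Illustrative pipeline; the model names and summaries are invented.
pipeline = [
    Stage("transcription", "speech-model-a", "validated on internal clinic audio"),
    Stage("structuring", "extractor-b"),
    Stage("code suggestion", "coder-c", "validated against coder-reviewed charts"),
]


def stages_without_validation(stages: list[Stage]) -> list[str]:
    """Return the tasks whose model has no validation summary."""
    return [s.task for s in stages if not s.validation_summary]


unvalidated = stages_without_validation(pipeline)
print(unvalidated)
```

Even in this toy version, a single "validity" field for the whole tool would hide the fact that one of the three models has no validation to report.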

2. Hybrid Systems

Many tools combine rules-based logic with predictive models. For example, a sepsis prediction tool might use both deterministic rules (like "alert if temperature > 101.5°F") and ML-derived predictions. The regulation creates an artificial divide between these approaches.
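Here is a minimal Python sketch of such a hybrid tool. The temperature rule is the one from the example above; the 0.8 risk threshold and the function name are assumptions for illustration, and `model_risk` stands in for whatever score an ML model would produce.

```python
FEVER_THRESHOLD_F = 101.5  # deterministic rule from the example above


def alert_reasons(temp_f: float, model_risk: float, risk_threshold: float = 0.8) -> list[str]:
    """Return every reason the hybrid tool would alert (empty list means no alert).

    risk_threshold is an arbitrary illustrative cutoff, not a clinical recommendation.
    """
    reasons = []
    if temp_f > FEVER_THRESHOLD_F:
        reasons.append("rule: temperature > 101.5°F")
    if model_risk >= risk_threshold:
        reasons.append("model: predicted risk at or above threshold")
    return reasons


print(alert_reasons(102.0, 0.2))  # fired by the rule only
print(alert_reasons(98.6, 0.9))   # fired by the model only
print(alert_reasons(102.0, 0.9))  # fired by both
```

From the clinician's side all three cases look like the same alert, yet only the second and third involve a prediction. Tracking the reason per alert is one way a tool could keep the two paths distinguishable.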

3. Continuous Learning Systems

Some AI systems employ continuous learning where the model updates itself based on new data. The DSI framework assumes more traditional model development with discrete training, validation, and deployment phases. This creates possible disclosure confusion:

How do you report "training data characteristics" for a model that's continuously evolving?

How often does "external validation" need to be performed in a continuously learning system?
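One possible answer, sketched below in Python, is to treat each disclosure as a dated snapshot tied to a model version and to flag snapshots whose validation has aged out. Everything here is an assumption on my part: the field names are hypothetical, and the 180-day window is arbitrary, since the rule does not specify a validation cadence.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class DisclosureSnapshot:
    """Hypothetical point-in-time disclosure for a continuously updated model."""
    model_version: str
    training_data_through: date
    last_external_validation: Optional[date]


def validation_is_stale(snap: DisclosureSnapshot, today: date, max_age_days: int = 180) -> bool:
    """True if the model was never externally validated or the validation is too old."""
    if snap.last_external_validation is None:
        return True
    return (today - snap.last_external_validation).days > max_age_days


old = DisclosureSnapshot("v12", date(2024, 11, 30), date(2024, 1, 15))
recent = DisclosureSnapshot("v13", date(2024, 12, 15), date(2024, 12, 1))
today = date(2025, 1, 1)

print(validation_is_stale(old, today), validation_is_stale(recent, today))
```

The snapshot approach does not resolve the regulatory question, but it makes the gap visible: the model keeps moving while the last validation date stays put.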

4. Foundation Models and API Ecosystems

The regulations don't adequately address situations where:

A vendor uses a third-party foundation model they didn't train themselves

Multiple vendors contribute to a pipeline of AI processing

APIs are used to call external AI services within the certified health IT environment

The accountability and transparency requirements get murky in these cases.

Key Requirements: A Deeper Look

There are two main requirements: Attributes and an Intervention Risk Management (IRM) plan.

1. Attributes

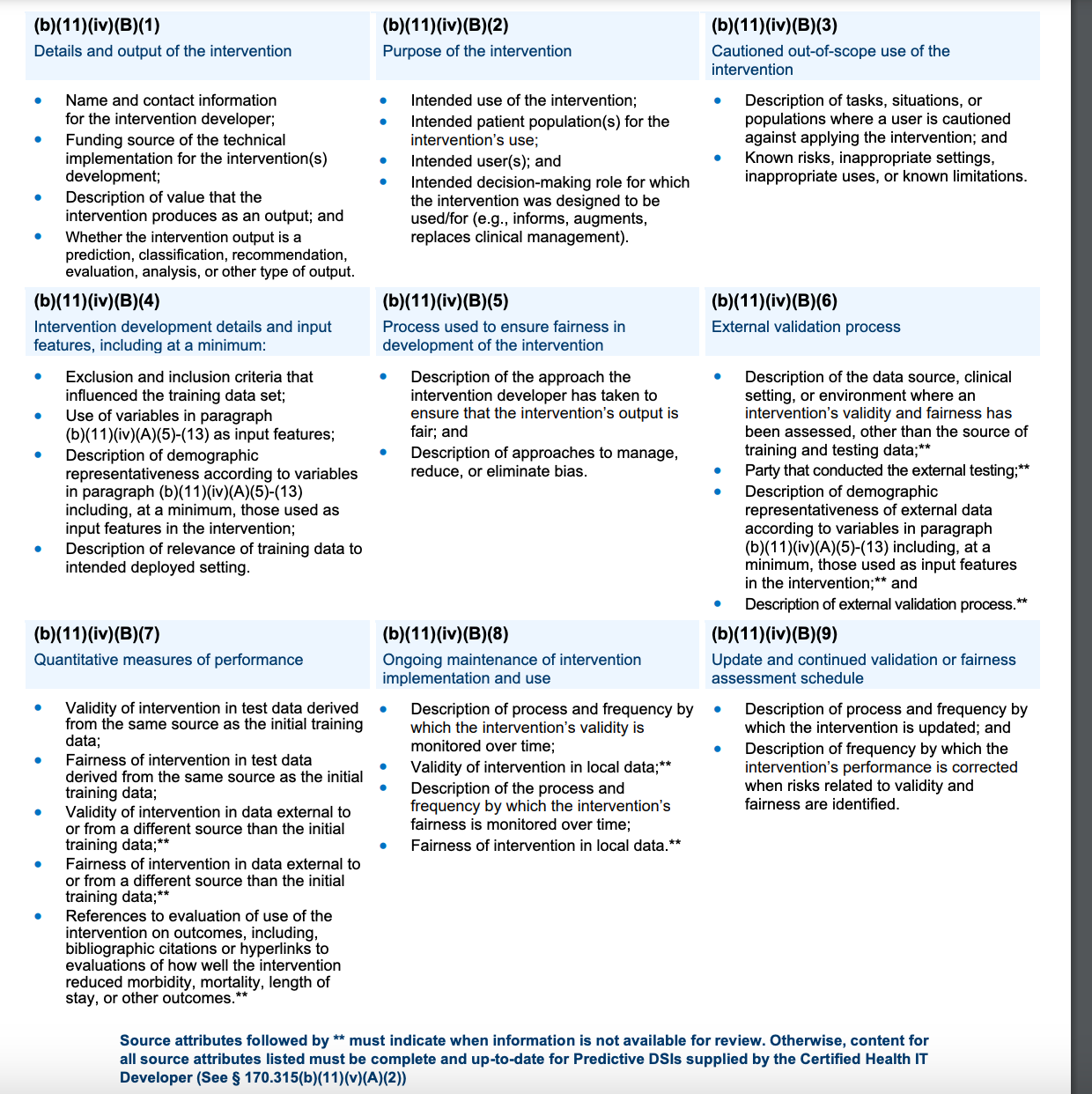

Health IT systems must support and display 31 attributes (in the table from ONC below):

Many of these are very similar to those suggested by CHAI’s model card

External validation is required, but not well-described or specified

“Cautioned out-of-scope use” should be similar to the “contraindications” in a drug insert

“Quantitative measures of performance” should demonstrate efficacy

“Intervention development details and input features” should describe the training data

Decision support interventions | HealthIT.gov

2. Intervention Risk Management (IRM)

EHR vendors are supposed to publicly document and implement practices to:

Analyze risks related to eight key characteristics: validity, reliability, robustness, fairness, intelligibility, safety, security, and privacy

Mitigate identified risks

Establish governance policies for data handling
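If you want to check a vendor's public summary against those eight characteristics, a checklist is enough. This is a minimal Python sketch; the `plan` dictionary is a hypothetical, hand-entered summary with invented text, not a format the ONC defines.

```python
# The eight characteristics named in the IRM requirement above.
IRM_CHARACTERISTICS = (
    "validity", "reliability", "robustness", "fairness",
    "intelligibility", "safety", "security", "privacy",
)


def uncovered_characteristics(plan: dict[str, str]) -> list[str]:
    """Return the characteristics with no (or only blank) text in the plan."""
    return [c for c in IRM_CHARACTERISTICS if not plan.get(c, "").strip()]


# Hypothetical summary: three keys present, one of them blank.
plan = {
    "safety": "Standard testing processes are applied before release.",
    "security": "Access controls are reviewed annually.",
    "privacy": "",
}

missing = uncovered_characteristics(plan)
print(missing)
```

A check like this only tells you whether something was written under each heading, not whether what was written is meaningful.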

The Timeline: Now?

You’ll be excited to know that I searched everywhere for the public information that was supposed to be available on January 1, 2025 as part of the Intervention Risk Management requirements. Epic has a public-facing fact sheet that has basically zero actual information (“We follow and enforce standard testing and project management processes that are designed to prevent and mitigate risk”). The government is supposed to have a list that’s available by hyperlink, but when I emailed the ONC I was directed to place an online help ticket, which I did, only to have my request closed out the next day without any explanation. If anyone else finds this list, please let me know!

The Public/Private Information Balance

(Theoretically) Publicly available:

Summary information about risk management practices via accessible hyperlinks

Available only to health system users:

Detailed source attributes

Performance metrics

Comprehensive risk details

As one think tank notes, the “final rule does not directly specify the model cards, nutrition labels, datasheets, data cards, or algorithmic audits required for compliance. Rather, the developer must choose which mechanism is most appropriate to communicate information about their AI tools and can choose a process that avoids sharing their AI models with an outside entity.” So it could be in a variety of different formats, and they can keep their proprietary information mostly proprietary.

Note that this information doesn’t have to be available to ALL users; it may only go to someone like the CMIO. This means it’s incumbent on the health system to distribute relevant information to practicing clinicians in a way they can actually make sense of and use in clinical practice. I hope some of you are working on those studies now!

What This Means for Future Regulation

This regulation may be the first step in a series of similar regulations requiring more transparency. While currently limited to EHR vendors and decision support interventions, it establishes principles that could expand to broader regulation of healthcare AI.

For healthcare organizations, these requirements may provide more information about some decision support tools, but how useful the information is to both the healthcare system and the clinicians actually using these tools is not yet clear.

For EHR vendors, compliance means new processes for documenting, maintaining, and disclosing information about the AI tools they supply. There’s also a requirement for external validation of DSI tools.

Because AI transparency has so many aspects, identifying the most crucial and highest impact areas for clinicians and patients will help us determine where to focus our transparency efforts going forward.

What's your take? Are these regulations a meaningful step forward or a bureaucratic hurdle?